Measuring Developer Productivity in the Age of AI

When Traditional Metrics Stop Working

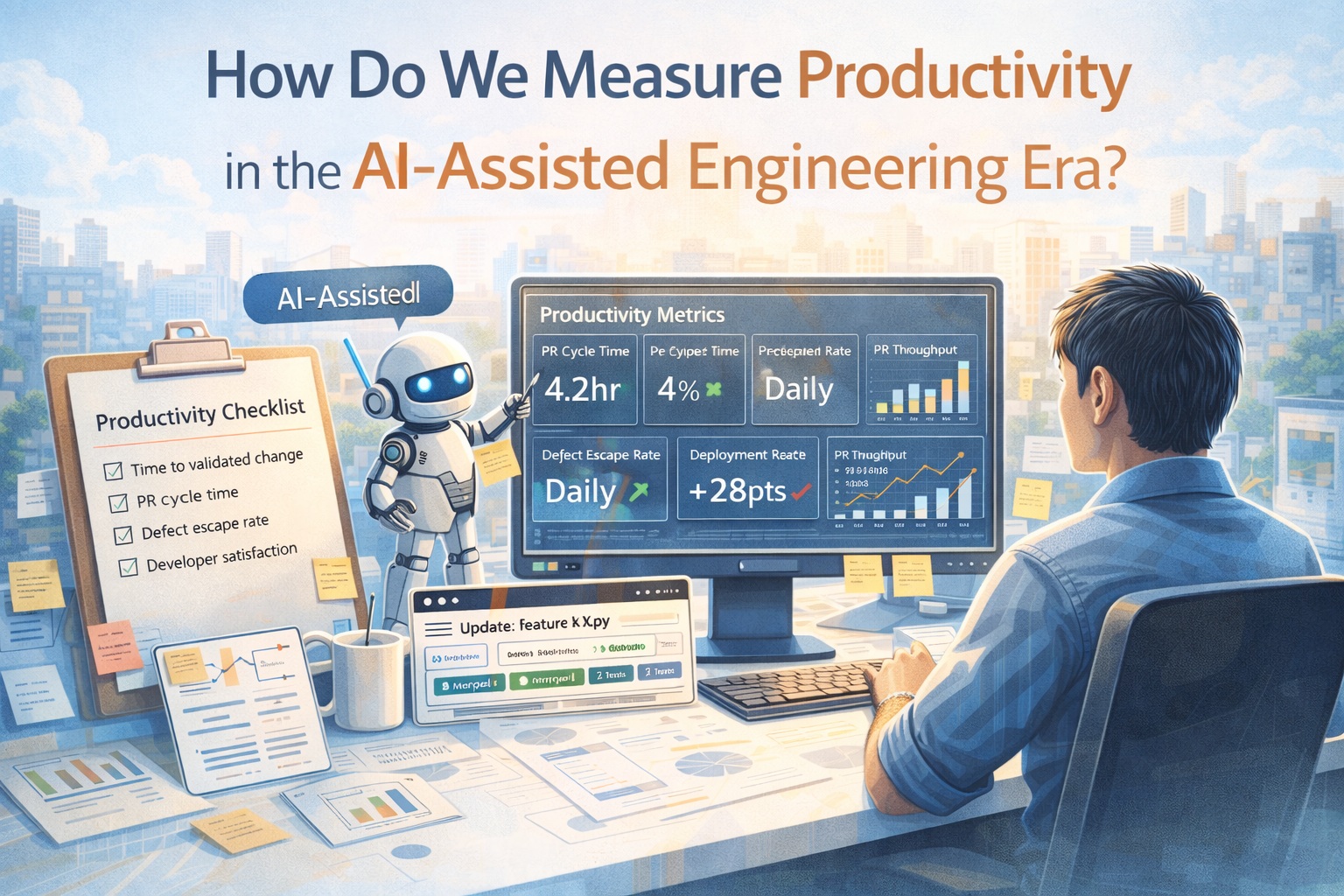

AI-assisted development is no longer an experiment. It is part of everyday engineering work across organizations of all sizes. Tools powered by large language models generate code, propose refactors, write tests, summarize pull requests, and coordinate multi-step engineering tasks.

This creates a fascinating and uncomfortable challenge: productivity is clearly improving, but it is becoming much harder to measure accurately.

Think about it. Counting lines of code has always been a flawed proxy for value, but in a world where an AI agent can scaffold an entire module in seconds, this metric becomes almost meaningless. Commit counts ignore the difference between a thoughtful architectural decision that takes a day and a hundred auto-generated boilerplate files. Story points fluctuate because AI accelerates some tasks dramatically while leaving others untouched.

The real question engineering leaders should be asking is not "Are developers faster?" but rather: "Are we delivering better outcomes, more safely and sustainably?"

In my previous posts, I explored how DevOps foundations prepare systems for agents, how the engineer's role is evolving, how humans and agents collaborate through IDEs and pull requests, how to design software for an agent-first world, and how great engineers communicate with AI through specifications. This post tackles a question that matters to every engineering leader: how do we actually measure productivity when AI is part of the team?

The Problem with Activity Metrics

For years, teams have tracked signals like commit counts, story points completed, hours logged, and lines of code written. These still provide some context, but they should never be confused with productivity itself.

Here is why this distinction matters more now than ever:

A developer using GitHub Copilot might generate 300 lines of well-structured code in the time it previously took to write 50. Does that mean they are six times more productive? Not necessarily. What if half of those lines introduce subtle bugs that surface weeks later? What if the generated code duplicates logic that already exists elsewhere in the codebase?

Conversely, a developer who spends an entire morning writing a clear specification and acceptance criteria, then has the GitHub Copilot coding agent implement it in a single pull request, might show very little "activity" in traditional metrics. Yet their output could be orders of magnitude more valuable.

Activity is not productivity. Output is not outcome.

Shifting to Outcome-Focused Signals

In an AI-enabled environment, outcome-focused signals paint a much clearer picture of what is actually happening.

Cycle Time: The Most Honest Metric

Cycle time, measured from idea to production, often becomes the clearest indicator of real productivity gains. If AI reduces the time needed to design, implement, review, and deploy a change, the organization gains genuine agility. This metric is hard to game and reflects the entire value stream, not just isolated steps.
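To make this concrete, here is a minimal Python sketch that computes median cycle time from timestamps. It assumes each work item records when the idea was logged (for example, ticket creation) and when the change reached production. The `(item_id, started_at, deployed_at)` tuple shape and the function names are hypothetical and not tied to any particular tool.

```python
from datetime import datetime
from statistics import median


def cycle_times_hours(items):
    """Cycle time in hours for each item that has reached production.

    items: iterable of (item_id, started_at, deployed_at) tuples, where
    deployed_at is None for work still in progress (hypothetical shape).
    """
    hours = []
    for _item_id, started_at, deployed_at in items:
        if deployed_at is None:  # not in production yet, so not counted
            continue
        hours.append((deployed_at - started_at).total_seconds() / 3600)
    return hours


def median_cycle_time_hours(items):
    """Median cycle time in hours, or None if nothing has shipped yet."""
    hours = cycle_times_hours(items)
    return median(hours) if hours else None


items = [
    ("A-1", datetime(2024, 5, 1, 9), datetime(2024, 5, 2, 9)),   # 24 h
    ("A-2", datetime(2024, 5, 1, 9), datetime(2024, 5, 1, 21)),  # 12 h
    ("A-3", datetime(2024, 5, 1, 9), datetime(2024, 5, 5, 9)),   # 96 h
    ("A-4", datetime(2024, 5, 3, 9), None),                      # in progress
]
print(median_cycle_time_hours(items))  # 24.0
```

Using the median rather than the mean keeps a handful of long-running items from dominating the number.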

Pull Request Quality

Are reviews becoming smoother? Are there fewer revision cycles? Are discussions shifting from syntax nitpicks to architecture and product value? When AI handles the boilerplate, reviewers can focus on what matters: correctness, security, performance, and alignment with the overall design. This is a qualitative shift that numbers alone cannot capture, but that teams feel immediately.

Defect Escape Rate

Faster coding is only valuable if reliability stays stable or improves. This is perhaps the most critical check on AI-driven productivity claims. If AI-generated code introduces subtle bugs, security vulnerabilities, or edge-case failures, then apparent speed gains are hiding future operational costs. A team that ships twice as fast but generates 50% more production incidents has not actually become more productive.
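One common way to express this is a defect escape rate: the share of all known defects that were first found in production rather than before release. The sketch below assumes that definition, assumes both counts cover the same changes and time window, and uses made-up numbers purely for illustration.

```python
def defect_escape_rate(found_before_release: int, found_in_production: int) -> float:
    """Fraction of all known defects that escaped to production.

    Assumes both counts cover the same set of changes and time window.
    Returns 0.0 when no defects were recorded at all.
    """
    total = found_before_release + found_in_production
    if total == 0:
        return 0.0
    return found_in_production / total


# Illustrative counts only: more code shipped, but a larger share escaping.
before_ai = defect_escape_rate(found_before_release=40, found_in_production=10)
after_ai = defect_escape_rate(found_before_release=45, found_in_production=15)
print(f"{before_ai:.0%} -> {after_ai:.0%}")  # 20% -> 25%
```

A rising rate like this is the warning sign described above: apparent speed is being paid for later, in production.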

Developer Experience

Surveys, internal feedback loops, and retention trends reveal whether AI tools genuinely reduce cognitive load or simply shift it elsewhere. A tool that generates code faster but forces engineers to spend more time reviewing, debugging, and fixing that code is not a net win. The best signal here is when developers report spending more time on interesting, high-impact work and less time on repetitive tasks.

How AI Changes the Development Workflow (Unevenly)

One of the trickiest aspects of measuring AI productivity is that improvements are not evenly distributed across the development lifecycle.

During ideation and design, LLMs help engineers explore approaches faster, compare architectural options, and document tradeoffs. This shortens the "blank page" phase and encourages experimentation. But this gain is almost invisible in traditional metrics.

Inside the IDE, AI pair programmers reduce time spent on repetitive scaffolding, test generation, and API usage discovery. Developers move more quickly from intent to working prototype. This is the most visible improvement, and it is the one most commonly measured, but it represents only a fraction of the total workflow.

In pull requests, AI can summarize changes, suggest improvements, and identify potential risks. This can transform reviews into higher-level conversations about correctness and maintainability. The productivity gain here shows up as faster review turnaround, but its real value is in higher-quality decisions.

At the system level, emerging agent workflows automate multi-step tasks such as dependency upgrades, documentation refreshes, or large-scale refactoring campaigns. These are areas where productivity gains compound over time, but they are also the hardest to attribute and measure.

Because improvements occur at different stages, measuring only one step of the pipeline will always miss the bigger picture.

Metrics That Actually Tell You Something Useful

Teams experimenting with AI-assisted development often find value in combining quantitative and qualitative signals. Here is a framework that works well in practice:

| Metric | What It Reveals | Watch Out For |
|---|---|---|
| Lead time for changes | End-to-end delivery speed | Can decrease while quality drops |
| Review turnaround time | Collaboration efficiency | May reflect reviewer availability, not code quality |
| Change failure rate | Reliability under speed | Lagging indicator; problems surface weeks later |
| Time spent on toil | Where AI adds real value | Self-reported; requires trust and honesty |
| Developer satisfaction | Whether tools genuinely help | Survey fatigue can skew results |

Lead time for changes remains one of the most reliable indicators. If it decreases while system stability holds steady, productivity is likely improving in a meaningful way. This metric aligns directly with the DORA (DevOps Research and Assessment) framework that many organizations already track.
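The "decreases while stability holds steady" rule can be written down as an explicit check. This is a sketch under assumptions: the `DeliverySnapshot` type and `likely_improving` function are hypothetical, and the two-percentage-point tolerance on change failure rate is an arbitrary example value each team would set for itself.

```python
from dataclasses import dataclass


@dataclass
class DeliverySnapshot:
    median_lead_time_hours: float
    change_failure_rate: float  # fraction of deployments that caused a failure


def likely_improving(baseline: DeliverySnapshot,
                     current: DeliverySnapshot,
                     cfr_tolerance: float = 0.02) -> bool:
    """True only if lead time dropped AND change failure rate did not rise
    by more than cfr_tolerance (absolute). The tolerance is an assumption."""
    faster = current.median_lead_time_hours < baseline.median_lead_time_hours
    stable = current.change_failure_rate <= baseline.change_failure_rate + cfr_tolerance
    return faster and stable


baseline = DeliverySnapshot(median_lead_time_hours=48.0, change_failure_rate=0.10)
print(likely_improving(baseline, DeliverySnapshot(30.0, 0.11)))  # True
print(likely_improving(baseline, DeliverySnapshot(30.0, 0.15)))  # False
```

Requiring both conditions at once is the point: a faster lead time on its own never counts as an improvement.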

Review turnaround time can reveal whether collaboration is becoming more efficient. Faster reviews often signal clearer code, better automated checks, and improved communication.
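A minimal sketch of review turnaround, assuming it is measured from when a pull request is opened to its first review. Some teams measure to approval or merge instead, and the `(opened_at, first_review_at)` pair shape here is hypothetical.

```python
from datetime import datetime, timedelta
from statistics import median


def median_review_turnaround_hours(pull_requests):
    """pull_requests: iterable of (opened_at, first_review_at) pairs, with
    first_review_at set to None while a PR is still waiting (hypothetical shape).
    Returns the median wall-clock wait in hours, or None if nothing was reviewed."""
    waits = [
        (first_review_at - opened_at).total_seconds() / 3600
        for opened_at, first_review_at in pull_requests
        if first_review_at is not None
    ]
    return median(waits) if waits else None


opened = datetime(2024, 5, 6, 10)
prs = [
    (opened, opened + timedelta(hours=2)),
    (opened, opened + timedelta(hours=5)),
    (opened, opened + timedelta(hours=30)),
    (opened, None),  # still waiting for a first review
]
print(median_review_turnaround_hours(prs))  # 5.0
```

Because this uses wall-clock time, nights, weekends, and time zones are all included. That is one reason the number can reflect reviewer availability as much as code clarity.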

Change failure rate ensures that speed is not coming at the cost of reliability. This metric acts as a crucial safety valve.
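Change failure rate is the fraction of deployments that lead to a failure in production. The sketch below assumes each deployment carries a boolean flag set when it caused an incident, rollback, or hotfix. The `Deployment` type is hypothetical, and in practice that flag may only be set weeks after the deploy, which is what makes this a lagging indicator.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    deploy_id: str
    caused_failure: bool  # e.g. led to an incident, rollback, or hotfix


def change_failure_rate(deployments):
    """Failed deployments divided by all deployments, or None if there were none."""
    if not deployments:
        return None
    failures = sum(1 for d in deployments if d.caused_failure)
    return failures / len(deployments)


# Illustrative data: 20 deployments, the first 3 of which caused failures.
deployments = [Deployment(f"d{i}", caused_failure=(i < 3)) for i in range(20)]
print(change_failure_rate(deployments))  # 0.15
```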

Time spent on toil is a powerful lens. If developers consistently report less time writing boilerplate, searching documentation, or debugging trivial issues, AI is delivering real value, even if traditional metrics appear unchanged.
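Self-reported toil can still be tracked as a trend across survey rounds. This sketch assumes each response gives hours spent on toil and total working hours for the same week. The response shape and numbers are illustrative, and averaging per-person shares (rather than pooling everyone's hours) is a design choice that stops a few people with long weeks from dominating the result.

```python
from statistics import mean


def mean_toil_share(responses):
    """responses: (toil_hours, total_hours) pairs from one survey round.
    Returns the average per-person fraction of time spent on toil,
    skipping responses that report no working hours."""
    shares = [toil / total for toil, total in responses if total > 0]
    return mean(shares) if shares else None


before = [(12, 40), (8, 40), (20, 40)]
after = [(6, 40), (8, 40), (10, 40)]
print(f"toil share: {mean_toil_share(before):.0%} -> {mean_toil_share(after):.0%}")
# toil share: 33% -> 20%
```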

Self-reported developer satisfaction should not be underestimated. Engineers are usually quick to notice whether tools genuinely help them focus on meaningful work or just add noise to their workflow.

The Pitfalls That Trip Up Most Organizations

Expecting Immediate, Uniform Gains

AI adoption involves learning curves, workflow adjustments, and cultural shifts. Early metrics will look noisy. Some teams will see dramatic improvements in the first month; others will see minimal change or even temporary slowdowns as developers learn new patterns. This is normal. Give the data at least a quarter before drawing conclusions.

Confusing Correlation with Causation

Introducing AI often coincides with other improvements: better CI pipelines, clearer coding standards, renewed documentation practices, new team formations. Productivity gains usually come from the combination of all these changes. Attributing everything to the AI tool alone is misleading and can lead to poor investment decisions.

Measuring Usage Instead of Impact

Knowing how often a suggestion is accepted, how many chat messages are sent, or how many prompts are issued daily can be interesting for adoption tracking. But these numbers alone do not prove business value. A developer who accepts 90% of AI suggestions but produces buggy code is not more productive than one who accepts 30% but delivers clean, well-tested features.

Creating a Surveillance Culture

There is a real risk of pressuring developers to justify AI through constant measurement. Excessive monitoring undermines trust, discourages experimentation, and ultimately slows adoption. The goal of measurement should be to understand what is working and what needs adjustment, not to rank individual developers by their AI usage.

Ignoring the Total Cost of Quality

Speed metrics that ignore downstream costs are dangerous. If AI-generated code requires more review time, more debugging, more incident response, or more onboarding complexity for new team members, then the "productivity gain" might be an illusion. Always measure the full cost of delivering and maintaining software, not just the writing phase.

Practical Strategies for Teams and Leaders

Establish a baseline before large-scale rollout. Even a few weeks of pre-adoption metrics on cycle time, change failure rate, and developer satisfaction will give you something to compare against. Without a baseline, every improvement is anecdotal.
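Once a baseline exists, comparing against it can be as simple as a relative change per metric. A minimal sketch, assuming metrics are stored as plain name-to-number dictionaries. The metric names and values are hypothetical, and a zero baseline is rejected because a percent change from zero is undefined.

```python
def compare_to_baseline(baseline, current):
    """Percent change of each baseline metric that also appears in `current`.
    Metrics missing from `current` are skipped; a zero baseline raises."""
    changes = {}
    for metric, before in baseline.items():
        if metric not in current:
            continue
        if before == 0:
            raise ValueError(f"baseline for {metric!r} is zero; use an absolute delta instead")
        changes[metric] = (current[metric] - before) / before * 100
    return changes


baseline = {"median_cycle_time_h": 50.0, "change_failure_rate": 0.12, "satisfaction_1_to_5": 3.4}
current = {"median_cycle_time_h": 40.0, "change_failure_rate": 0.12, "satisfaction_1_to_5": 3.9}

for metric, pct in compare_to_baseline(baseline, current).items():
    print(f"{metric}: {pct:+.1f}%")
```

Reading the three numbers together matters more than any one of them: a shorter cycle time only counts if change failure rate holds and satisfaction does not fall.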

Define success in terms of outcomes that matter to your organization. For some teams, this means faster feature delivery. For others, it could be reduced incident rates, improved onboarding speed for new engineers, or the ability to tackle technical debt that has been postponed for years.

Combine system metrics with human feedback. Dashboards tell part of the story, but developer interviews and retrospectives often reveal the real workflow changes. Schedule regular check-ins where engineers can share what is working and what is frustrating.

Invest in enablement, not just tooling. Training developers on effective prompting, code review practices for AI-generated changes, and secure usage patterns often has a larger impact than the tool choice itself. A well-trained team with a good tool will outperform an untrained team with a great tool every time.

Treat measurement as iterative. As AI capabilities evolve, the signals that matter today may not be the same ones that matter six months from now. Build a measurement practice that can adapt, not a rigid framework that becomes outdated.

Share findings openly across the organization. When teams discover what works and what does not, that knowledge should flow freely. Create internal write-ups, host brown bag sessions, and build a knowledge base of AI productivity patterns specific to your organization.

The Bigger Picture: What Productivity Really Means Now

As generative AI and agent-based development continue to mature, productivity will increasingly be defined by how well humans and intelligent tools collaborate. The most successful teams will not be those that generate the most code, but those that learn the fastest, adapt the safest, and deliver the clearest value to users.

Measuring that kind of productivity requires moving beyond simplistic metrics and embracing a broader view of engineering effectiveness. It means accepting that some of the most valuable AI-driven improvements, like reduced cognitive load, faster learning curves for new team members, and more thoughtful code reviews, are difficult to quantify but profoundly important.

In the AI era, productivity is no longer just about speed. It is about amplifying human judgment, reducing unnecessary friction, and creating space for developers to focus on what truly matters: building software that solves real problems for real people.

If you are exploring AI-assisted development in your organization, start with one workflow, measure outcomes carefully, and expand based on real evidence rather than assumptions. The goal is not just to code faster. It is to build better software, and to know, with confidence, that your team is doing exactly that.